Disclaimer: Opinions expressed are solely my own and do not reflect the views or opinions of my employer or any other affiliated entities. Any sponsored content featured on this blog is independent and does not imply endorsement by, nor relationship with, my employer or affiliated organisations.

1. Introduction

The AI SOC market is growing fast and there are products on it that are doing serious work. Some of them have strong integration capabilities, solid reasoning engines, and response actions that actually execute in production. The market has come a long way in four years.

But there is a problem with how we evaluate these products.

When every vendor says "AI-powered response," that phrase covers everything from a fully autonomous isolation workflow to a chatbot that suggests you maybe think about resetting a password. Both get the same label in the marketing material. Both show up in the same analyst reports. And when a security team sits down to compare three products, they have no standardized way to measure the gap between "our AI handles response" and what that actually means in operational terms.

Some products are close to real autonomy in specific domains. Some are strong in analysis but thin on execution. Some have broad coverage but almost nothing runs without human approval. These are all valid positions on a maturity spectrum. The problem is that there is no shared framework to place them on that spectrum consistently.

So we built one.

We call it ARMM. And yes, the name is intentional.

A decade ago, the SOAR generation solved half the problem. We built the arms: playbooks, integrations, automated response workflows. The execution layer was there. What was missing was the brain. Every decision tree was hand-coded. Every branch was written by an engineer who had to anticipate every possible scenario. The arms moved, but only along rails that humans laid down manually. When the scenario deviated from the playbook, the arm froze.

Now the AI SOC generation has solved the other half. We built the brain. LLMs reason across alerts, correlate context, analyze logs, and make judgment calls that no static playbook could replicate. But somewhere along the way, a lot of products forgot to attach the arms. The reasoning is strong. The analysis is sharp. And then it hands you a summary and says "here is what you should probably do." The brain thinks. The arm does not move.

ARMM evaluates both. The reasoning quality, the decision-making maturity, the trust you can place in the AI's judgment. And the response capability, the execution depth, the ability to actually take action without three humans supervising. It weighs the arm heavier because that is where the industry gap is widest right now. But it does not ignore the brain, because an arm without a brain is just a SOAR playbook and we already know how that story ended.

ARMM is a structured scoring system for evaluating what an AI SOC solution can actually do in the response layer. It covers 80+ response capabilities across six domains: Identity, Network, Endpoint, Cloud, SaaS, and General Options. And it provides a common language so that when someone says "we handle response," there is a way to ask: at what level, across how many actions, and with what degree of autonomy?

The CyberSec Automation Blog has published over a dozen articles and podcast episodes covering what makes a good automation program succeed, how to evaluate tools, and how to structure decision-making around security automation purchases. We have built tool comparison lists, evaluation checklists, and decision frameworks. ARMM is the next step in that work.

2. Why Another Framework

Most existing evaluation methods for AI SOC solutions are either vendor-produced (and therefore biased toward their own capabilities) or too generic to capture the specific nuances of AI-driven response. Analyst reports compare products at a feature-list level without measuring automation depth. Vendor demos show best-case scenarios without exposing the operational friction underneath.

Our focus is narrow and deliberate: response capabilities. Most AI SOC solutions already deliver strong reporting and analysis features. They can summarize alerts, correlate indicators, and reduce false negatives in a mature environment (we emphasize mature because these solutions need access to quality logs and, in more advanced implementations, to organizational documentation and environment-specific context). Where the industry needs structured evaluation is in the response layer: the actions an AI SOC solution can take, how autonomously it can take them, and under what conditions.

We acknowledge that some of the capabilities listed in this framework may seem aspirational at this stage. That is by design. The framework is intended to serve both as a current-state evaluation tool and as a forward-looking roadmap.

We are not scoring specific vendors. The goal is to establish a shared methodology that allows security teams to answer questions such as:

Which solution provides more relevant response capabilities for my environment?

Which solution operates at a higher level of autonomy for the actions that matter to my program?

Which solution can help me reduce my alert backlog without requiring additional headcount?

For product managers working on AI SOC products, the framework serves as a competitive analysis baseline:

Where is my competition positioned, and what capabilities are driving their wins?

What high-value capabilities are underserved across the market?

Am I investing engineering resources in features that security practitioners actually prioritize?

Because this is a fast-moving space, we are starting at version 0.1. This is a living document. Version 1.0 will be designated when the framework reaches a level of stability and community validation that warrants it.

3. Scoring Methodology

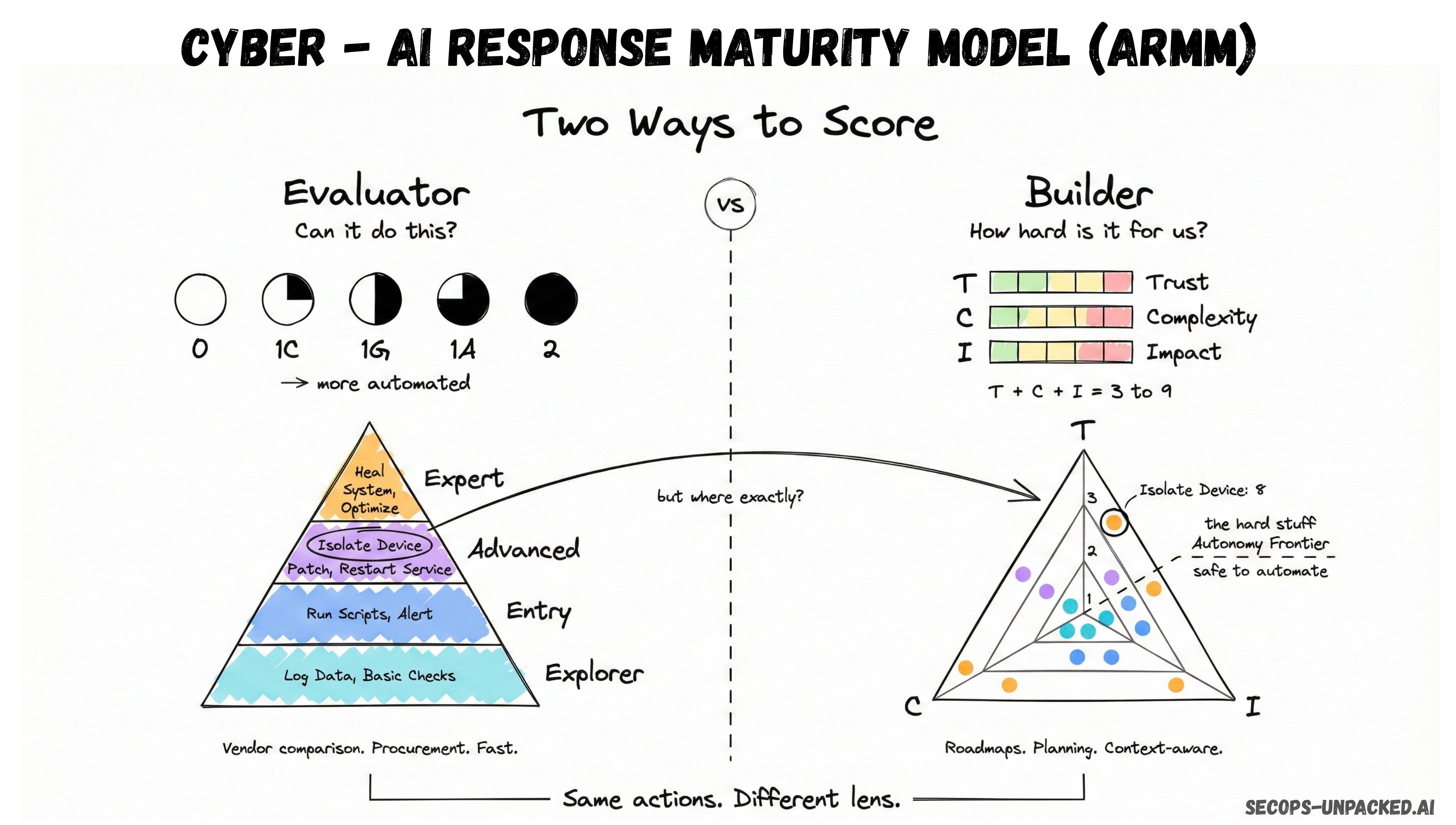

ARMM supports two distinct approaches to scoring, each designed for a different operational question.

Evaluator Mode is the straightforward path. You score each capability on the 0-1-2 scale described in Section 3.1 (with the 1C, 1G, 1A sub-levels), and the framework calculates your coverage rate, automation depth, and per-plane breakdown. The tier placements come from ARMM's reference tables. You do not need to factor in your organizational context. This mode answers one question: given two or more AI SOC products, which one covers more of what I need and at what automation level? It is built for procurement teams, SOC managers running vendor evaluations, and anyone who needs a side-by-side comparison without spending weeks on it.

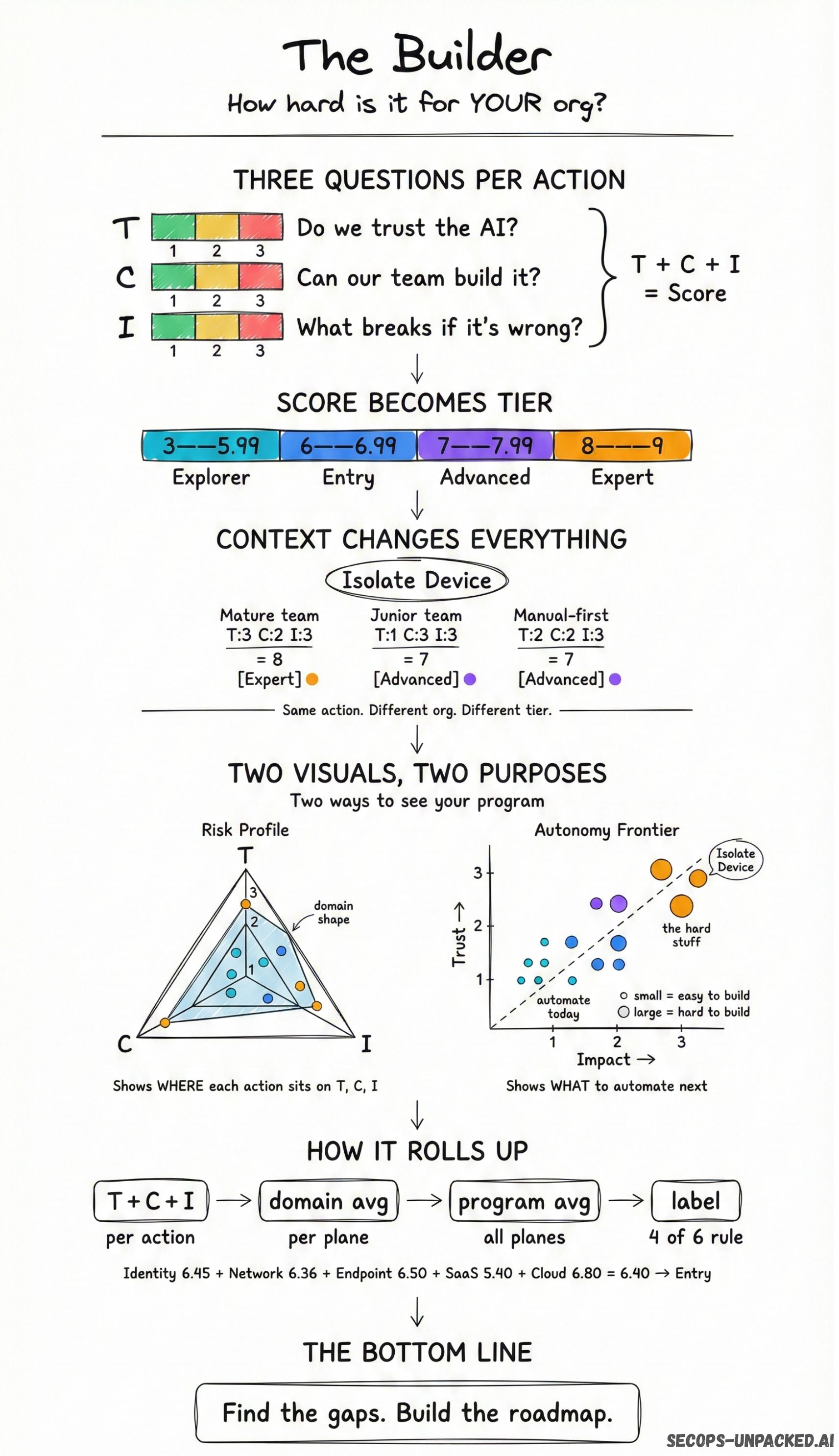

Builder Mode adds a second scoring layer on top. Instead of relying on fixed reference tiers, you score each action across three axes: Trust (how much confidence does your implementation warrant), Complexity (how hard is it for your specific team to build and maintain), and Impact (what is the blast radius if something goes wrong). The action score becomes T + C + I, and the tier placement shifts based on your organizational reality. The same action that scores Entry for a mature team with established automation pipelines might score Explorer for a team that is deploying its first AI SOC integration. This mode answers a different question: given my team, my environment, and my risk tolerance, where should I invest engineering effort to move up the maturity ladder? It is built for product managers, engineering leads, and internal SOC teams running their own automation programs.

Both modes evaluate the same six planes and the same 80+ response capabilities. Both produce per-plane breakdowns and a composite maturity label. The difference is whether you want a product-level comparison (Evaluator) or an environment-aware implementation roadmap (Builder). The public ARMM app at armm.secops-unpacked.ai supports both.

3.1 The Capability Scoring System (0-1-2)

Each response capability in the framework is scored on a three-level scale that measures the degree of automation available:

0 (Not Available): The feature does not exist in the product. There is no mechanism, manual or automated, to perform this action through the AI SOC solution.

1 (Available with Human Involvement): The feature exists but requires some form of human interaction before execution. Because human involvement can range from full collaboration to a simple approval click, this level is subdivided into three sub-categories:

1C (Collaborator): The solution requires continuous back-and-forth interaction with an analyst to reach a response action. The AI acts as a partner, not an autonomous agent.

1G (Guide): The solution generates a plan and presents options for a specific action, but it is not confident in recommending a single path. It lays out alternatives and lets the analyst choose.

1A (Approver): The action is essentially ready to execute. The AI has determined the correct response and prepared the action, but requires a human to click approve before it fires. This is the closest step to full automation while still keeping a human in the loop.

2 (Fully Automated): The action is performed without any human involvement. The vendor (or internal implementation) has demonstrated that the AI SOC solution can execute this action with sufficient confidence that no human review is required. At the time of writing, level 2 is exceptionally rare for most response categories. The framework includes it to establish the target state and to differentiate products that are moving in that direction from those that are not.
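If you record Evaluator Mode scores in a script or spreadsheet export, it helps to treat the five possible values as a closed set so that typos such as "1B" are rejected rather than silently counted. The following is a minimal Python sketch under that assumption; the `Level` enum, its member names, and the `parse_level` helper are hypothetical and not taken from the ARMM app.

```python
from enum import Enum


class Level(Enum):
    """Evaluator Mode scores from Section 3.1 (hypothetical representation)."""

    NOT_AVAILABLE = "0"
    COLLABORATOR = "1C"
    GUIDE = "1G"
    APPROVER = "1A"
    FULLY_AUTOMATED = "2"

    @property
    def numeric(self) -> int:
        # Collapse the 1C/1G/1A sub-levels back onto the 0-1-2 scale.
        return int(self.value[0])

    @property
    def human_in_loop(self) -> bool:
        # Every level-1 variant needs some human interaction before execution.
        return self.numeric == 1


def parse_level(raw: str) -> Level:
    """Parse a recorded score such as '1a' or ' 2 '; raises ValueError if unknown."""
    return Level(raw.strip().upper())
```

Keeping the sub-level as the stored value, and deriving the 0-1-2 number from it, means the coarse score and the 1C/1G/1A detail can never disagree.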

3.2 The Three Scoring Axes (Builder Mode)

In Builder Mode, each response action is evaluated across three dimensions:

Axis 1: Decision Fidelity and Programmatic Trust (T)

This axis measures the confidence level warranted by the AI SOC implementation. It correlates directly with implementation quality: reasoning log depth, context-aware decision-making, and guardrails against hallucination.

T = 1 (Enrichment): AI output assists human-led investigations. The AI provides context and data but does not recommend or execute actions.

T = 2 (Validated): AI recommends a specific action. A human confirms before execution occurs.

T = 3 (Autonomous): AI executes without human intervention. This requires the highest level of implementation maturity and organizational trust.

Axis 2: Implementation and Maintenance Complexity (C)

This axis evaluates the technical friction in building and sustaining the automation, relative to the skills and resources of the team responsible for it. This is deliberately team-dependent. An automation rated C = 3 for a junior team may be C = 2 for a team of specialized AI engineers with established CI/CD pipelines for their playbooks.

C = 1 (Low): Simple API calls or native integrations with minimal configuration.

C = 2 (Medium): Multi-step orchestration across multiple systems requiring coordination and testing.

C = 3 (High): Complex behavioral baselining, legacy system integration, or custom model tuning.

Axis 3: Operational Impact and Blast Radius (I)

This axis captures the business risk associated with the action. It is typically the most stable axis across organizations, but shifts based on asset criticality. Isolating a standard employee laptop has a different blast radius than isolating a production database server.

I = 1 (Low): Negligible disruption. Background scans, tagging, enrichment activities.

I = 2 (Medium): Temporary disruption. Resetting a standard user session, blocking a non-critical port.

I = 3 (High): Significant downtime, data loss risk, or reputational damage. Production system changes, VIP account modifications, critical infrastructure alterations.

3.3 The Maturity Computation Logic

The scoring system builds from individual actions up to a full program assessment through five layers. Each layer uses a defined formula.

Layer 1: Action-Level Score (S)

For a single response action, the score is the sum of its three axis values:

$$S = T + C + I$$

The minimum possible score is 3 (T=1, C=1, I=1). The maximum is 9 (T=3, C=3, I=3).
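A minimal Python sketch of Layer 1, assuming each axis is recorded as an integer from 1 to 3. `action_score` is a hypothetical helper name; the range check simply enforces the axis definitions from Section 3.2.

```python
def action_score(t: int, c: int, i: int) -> int:
    """Layer 1 action score S = T + C + I, each axis restricted to 1, 2 or 3."""
    for name, value in (("T", t), ("C", c), ("I", i)):
        if value not in (1, 2, 3):
            raise ValueError(f"{name} must be 1, 2 or 3, got {value!r}")
    return t + c + i


# The bounds from the text, plus Isolate Device from the Endpoint reference table.
assert action_score(1, 1, 1) == 3
assert action_score(3, 3, 3) == 9
assert action_score(3, 2, 3) == 8
```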

Layer 2: Tier Mapping

The action score maps to one of four maturity tiers:

| Score Range | Tier | Description |
|---|---|---|
| 3.00 to 5.99 | Explorer | Foundational; low-risk quick wins with minimal blast radius |
| 6.00 to 6.99 | Entry | Stabilized; moderate effort and impact, suitable for early-stage programs |
| 7.00 to 7.99 | Advanced | Mature; requires high-fidelity reasoning and established trust |
| 8.00 to 9.00 | Expert | Critical; high blast radius, autonomous VIP handling, or production-critical actions |
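The tier table can be encoded as a lookup on lower bounds. This is a minimal Python sketch; `tier_for` is a hypothetical helper name. One design choice worth flagging: the table's ranges end at x.99, which leaves small gaps for averaged domain or program scores such as 5.995, so the sketch treats each row's lower bound as the only cut-off. That is an assumption about how the boundaries are meant to be read.

```python
def tier_for(score: float) -> str:
    """Map an action, domain or program score to an ARMM tier (Layer 2 table).

    Uses lower bounds only, so fractional means such as 5.995 still fall
    into exactly one tier instead of slipping between 5.99 and 6.00.
    """
    if not 3.0 <= score <= 9.0:
        raise ValueError(f"score {score} is outside the 3-9 range")
    if score >= 8.0:
        return "Expert"
    if score >= 7.0:
        return "Advanced"
    if score >= 6.0:
        return "Entry"
    return "Explorer"
```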

Layer 3: Domain Maturity Score (D)

The maturity score for a specific domain (e.g., Endpoint, Identity) is the arithmetic mean of all action scores within that domain:

$$D = \frac{1}{n}\sum_{k=1}^{n} S_k$$

Where $n$ is the number of scored actions in the domain and $S_k$ is the action-level score of the $k$-th action. The resulting $D$ value maps to a tier using the same thresholds from Layer 2.
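A minimal Python sketch of Layer 3, using the action scores from the Identity reference table in Section 4.1 as input. The helper names are hypothetical, and `tier_for` repeats the Layer 2 thresholds (as lower bounds) so that the block runs on its own.

```python
from statistics import fmean


def tier_for(score: float) -> str:
    """Layer 2 thresholds, applied as lower bounds."""
    if score >= 8.0:
        return "Expert"
    if score >= 7.0:
        return "Advanced"
    if score >= 6.0:
        return "Entry"
    return "Explorer"


def domain_score(action_scores: list[int]) -> float:
    """Layer 3: D is the arithmetic mean of the scored actions in one domain."""
    if not action_scores:
        raise ValueError("a domain needs at least one scored action")
    return fmean(action_scores)


# Action scores from the Identity reference table in Section 4.1.
identity = [5, 5, 5, 6, 6, 6, 7, 7, 7, 8, 8]
d = domain_score(identity)
print(f"Identity D = {d:.2f} -> {tier_for(d)}")  # Identity D = 6.36 -> Entry
```

Even though three Identity actions sit at Advanced and two at Expert, the domain as a whole lands at Entry because the mean is pulled down by the Explorer and Entry actions.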

Layer 4: Program Maturity Score (P)

The overall program score is the arithmetic mean of all domain scores, with equal weighting across all six planes:

$$P = \frac{1}{6}\sum_{j=1}^{6} D_j$$

Equal plane weighting is a deliberate design choice. It prevents planes with more actions (Endpoint has 22, SaaS has 10) from dominating the evaluation. Each plane contributes exactly one-sixth of the overall score.
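The sketch below contrasts the equal-weighted Layer 4 mean with a pooled mean over every individual action, to show why the distinction matters. It is a minimal Python illustration: the function names and the two-plane input (22 Endpoint actions all at 9, 10 SaaS actions all at 3) are hypothetical, and a real evaluation would pass all six planes.

```python
from statistics import fmean


def program_score(planes: dict[str, list[float]]) -> float:
    """Layer 4: average each plane first, then average the plane means equally."""
    return fmean(fmean(scores) for scores in planes.values())


def pooled_score(planes: dict[str, list[float]]) -> float:
    """For contrast only: one mean over every action, so large planes dominate."""
    return fmean(s for scores in planes.values() for s in scores)


# Hypothetical input: 22 Endpoint actions at 9, 10 SaaS actions at 3.
planes = {"Endpoint": [9] * 22, "SaaS": [3] * 10}
print(program_score(planes))  # 6.0
print(pooled_score(planes))   # 7.125
```

With equal weighting the result is 6.0 (Entry). The pooled mean of 7.125 would lift the program to Advanced purely because Endpoint has more rows, which is exactly the distortion the equal-weighting rule is designed to prevent.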

Layer 5: Composite Maturity Label

The composite label is not derived from the program score directly. It uses sequential gating logic:

The composite label equals the highest tier where at least four out of six planes independently meet that tier's threshold, and the qualification chain is unbroken from Explorer upward. A product cannot be labeled Advanced if it has gaps at the Explorer tier.

The four-out-of-six rule is intentionally forgiving. A product focused on cloud-native environments may legitimately deprioritize network-level response. That should not disqualify it from a meaningful composite label. But it still needs breadth across most planes to earn a higher tier.
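One way to express the Layer 5 rule in code, assuming that a plane which reaches a tier also satisfies every tier below it (true for the score thresholds in Layer 2) and that a plane with no qualifying coverage is recorded as `None`. `composite_label` and its `min_planes` parameter are hypothetical names; the ARMM app may implement the gating differently.

```python
from typing import Optional

TIERS = ["Explorer", "Entry", "Advanced", "Expert"]
RANK = {tier: rank for rank, tier in enumerate(TIERS)}


def composite_label(
    plane_tiers: dict[str, Optional[str]], min_planes: int = 4
) -> Optional[str]:
    """Layer 5 gating: highest tier met by >= min_planes planes, climbing from Explorer.

    Assumes a plane at a given tier also meets every lower tier; None marks a
    plane that does not qualify for any tier.
    """
    label = None
    for rank, tier in enumerate(TIERS):
        qualifying = sum(
            1 for t in plane_tiers.values() if t is not None and RANK[t] >= rank
        )
        if qualifying < min_planes:
            break  # chain broken: no higher tier can be awarded
        label = tier
    return label


example = {
    "Identity": "Advanced", "Network": "Entry", "Endpoint": "Entry",
    "Cloud": "Explorer", "SaaS": "Entry", "General Options": "Advanced",
}
print(composite_label(example))  # Entry
```

With the per-plane tiers from the example readout in Section 5.2, five planes clear Entry but only two clear Advanced, so the climb stops at Entry.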

3.4 Context-Aware Scoring: Why Environment Matters

ARMM recognizes that the maturity level of an automated action is not a static property of the feature itself. It is an emergent property of the environment where it is applied. The three axes (T, C, I) are all subject to organizational variance, which means the same product capability produces different scores in different contexts.

Example: "Isolate Device" evaluated by three different organizations using the same AI SOC product:

| Context | Trust (T) | Complexity (C) | Impact (I) | Score | Tier |
|---|---|---|---|---|---|
| Org A: Mature Program / Expert Team | 3 | 2 | 3 | 8 | Expert |
| Org B: New Program / Junior Team | 1 | 3 | 3 | 7 | Advanced |
| Org C: High-Risk Assets / Manual-First | 2 | 2 | 3 | 7 | Advanced |

The product capability is identical across all three. The scores differ because the Trust axis reflects implementation maturity, the Complexity axis reflects team capability, and the Impact axis (while stable here) can shift based on asset criticality. A vendor benchmark alone is insufficient. Builder Mode exists specifically to capture this variance.
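A minimal Python sketch that scores one action under several organizational contexts, using the "Isolate Device" rows from the table in this section. `score_contexts` is a hypothetical helper, and `tier_for` repeats the Layer 2 thresholds so the block is self-contained.

```python
def tier_for(score: int) -> str:
    """Layer 2 thresholds, applied as lower bounds."""
    if score >= 8:
        return "Expert"
    if score >= 7:
        return "Advanced"
    if score >= 6:
        return "Entry"
    return "Explorer"


def score_contexts(
    contexts: dict[str, tuple[int, int, int]]
) -> dict[str, tuple[int, str]]:
    """Score the same action under different (T, C, I) contexts."""
    results = {}
    for context, (t, c, i) in contexts.items():
        s = t + c + i
        results[context] = (s, tier_for(s))
    return results


# "Isolate Device" rows from the context table in Section 3.4.
isolate_device = {"Org A": (3, 2, 3), "Org B": (1, 3, 3), "Org C": (2, 2, 3)}
for org, (s, tier) in score_contexts(isolate_device).items():
    print(f"{org}: S = {s} ({tier})")
```

Org B's lower Trust is offset by its higher Complexity, so Org B and Org C reach the same score by different routes. Keeping the per-axis values next to S preserves that distinction, which the sum alone hides.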

4. Response Capability Domains

The framework organizes response capabilities into six domains. The first five (Identity, Network, Endpoint, Cloud, SaaS) cover specific technical response planes. The sixth (General Options / Usability) covers platform-level characteristics that affect the operational quality of the solution independent of any specific response action.

For the first five domains, each capability is scored using the 0-1-2 system described in Section 3.1 (Evaluator Mode) or the T+C+I system described in Sections 3.2 and 3.3 (Builder Mode). For the General Options domain, the scoring criteria shift slightly: 0 means the feature is not available, 1 means the feature is available but limited in capability or partially implemented, and 2 means the feature is fully available, functional, and tested.

4.1 Identity Response Plane

Identity-related response actions target user accounts, service principals, groups, and access permissions. These actions are among the most commonly needed in incident response and are often the first automation candidates for SOC teams.

| Action | Description |
|---|---|
| Reset Password | Reset a standard user's password |
| Revoke Sessions | Terminate all active sessions for a user account |
| Disable User | Disable a standard user account |
| Disable Service Principals | Disable a service account, service principal, or managed identity |
| Remove Permissions | Remove a specific set of permissions from an account |
| Group Adherence | Add or remove an account from a security group |
| Group Creation | Create a new security group |
| Token Rotation | Create or rotate secrets and tokens |
| Delete Sharing Permissions | Remove sharing permissions on resources |
| Label User (Tagging) | Apply a tag or label to a user account for tracking |

Builder Mode Reference Scoring (Mature AI SOC Program, Skilled Engineering Team):

| Action | T | C | I | Score | Tier |
|---|---|---|---|---|---|
| Group Adherence | 3 | 1 | 1 | 5 | Explorer |
| Label User (Tagging) | 3 | 1 | 1 | 5 | Explorer |
| Revoke Sessions | 3 | 1 | 1 | 5 | Explorer |
| Reset Password (Std) | 3 | 1 | 2 | 6 | Entry |
| Disable Standard User | 3 | 1 | 2 | 6 | Entry |
| Delete Sharing Permissions | 2 | 2 | 2 | 6 | Entry |
| Remove Specific Permissions | 2 | 2 | 3 | 7 | Advanced |
| Group Creation | 3 | 2 | 2 | 7 | Advanced |
| Disable Service Principals | 2 | 2 | 3 | 7 | Advanced |
| Reset VIP Password | 3 | 2 | 3 | 8 | Expert |
| Rotate Secrets (Prod) | 2 | 3 | 3 | 8 | Expert |
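Reference tables like this one are easy to get out of sync when an axis value is edited but the Score or Tier column is not. A minimal Python sketch of a consistency check, using the Identity rows above as input; `check_reference_rows` is a hypothetical helper, and the tuple layout simply mirrors the table columns.

```python
def tier_for(score: int) -> str:
    """Layer 2 thresholds, applied as lower bounds."""
    if score >= 8:
        return "Expert"
    if score >= 7:
        return "Advanced"
    if score >= 6:
        return "Entry"
    return "Explorer"


def check_reference_rows(
    rows: list[tuple[str, int, int, int, int, str]]
) -> list[tuple[str, int, str]]:
    """Return (action, expected score, expected tier) for rows that disagree with T + C + I."""
    problems = []
    for action, t, c, i, score, tier in rows:
        expected = t + c + i
        if score != expected or tier != tier_for(expected):
            problems.append((action, expected, tier_for(expected)))
    return problems


# Rows transcribed from the Identity reference table.
IDENTITY_REFERENCE = [
    ("Group Adherence", 3, 1, 1, 5, "Explorer"),
    ("Label User (Tagging)", 3, 1, 1, 5, "Explorer"),
    ("Revoke Sessions", 3, 1, 1, 5, "Explorer"),
    ("Reset Password (Std)", 3, 1, 2, 6, "Entry"),
    ("Disable Standard User", 3, 1, 2, 6, "Entry"),
    ("Delete Sharing Permissions", 2, 2, 2, 6, "Entry"),
    ("Remove Specific Permissions", 2, 2, 3, 7, "Advanced"),
    ("Group Creation", 3, 2, 2, 7, "Advanced"),
    ("Disable Service Principals", 2, 2, 3, 7, "Advanced"),
    ("Reset VIP Password", 3, 2, 3, 8, "Expert"),
    ("Rotate Secrets (Prod)", 2, 3, 3, 8, "Expert"),
]

print(check_reference_rows(IDENTITY_REFERENCE))  # []
```

The same check can be pointed at the Network, Endpoint, and Cloud tables by transcribing their rows in the same layout.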

4.2 Network Response Plane

Network-level response actions modify traffic flow, access control, and device connectivity. These are often high-impact actions with significant blast radius, making the Trust and Impact axes particularly important in scoring.

| Action | Description |
|---|---|
| ACL Creation | Create a new access control list on the network |
| VLAN Creation | Create a new VLAN on the network |
| Firewall Rule Creation | Create a new firewall rule |
| IPS Rule Creation | Create a new IPS rule in deny mode |
| Network Connection Reset | Reset a network connection |
| DNS Entry Change | Modify an entry in the DNS records |
| Routing Table Change | Modify a routing entry |
| Sinkhole Traffic | Redirect traffic to a sinkhole |
| Rate Limit Traffic | Limit traffic by a particular indicator |
| VLAN Modification | Move a device to a restricted VLAN |
| Quarantine Device | Quarantine a device at the network level |
| Quarantine Server | Quarantine a server running an enterprise-level service |
| Modify NAT Rules | Change NAT rules to modify traffic patterns |

Builder Mode Reference Scoring:

| Action | T | C | I | Score | Tier |
|---|---|---|---|---|---|
| Network Connection Reset | 3 | 1 | 1 | 5 | Explorer |
| Sinkhole Traffic | 3 | 1 | 1 | 5 | Explorer |
| Rate Limit Traffic | 3 | 1 | 1 | 5 | Explorer |
| ACL Creation | 2 | 2 | 2 | 6 | Entry |
| Quarantine Device | 3 | 1 | 2 | 6 | Entry |
| Firewall Rule Creation | 2 | 1 | 3 | 6 | Entry |
| DNS Entry Change | 2 | 2 | 2 | 6 | Entry |
| Modify NAT Rules | 2 | 2 | 2 | 6 | Entry |
| IPS Rule Creation | 2 | 2 | 3 | 7 | Advanced |
| VLAN Creation | 3 | 2 | 2 | 7 | Advanced |
| VLAN Modification | 3 | 2 | 3 | 8 | Expert |
| Routing Table Change | 3 | 3 | 3 | 9 | Expert |
| Quarantine Server | 2 | 3 | 3 | 8 | Expert |

4.3 Endpoint Response Plane

Endpoint response actions operate directly on devices and their software environment. This domain has the largest number of capabilities because endpoint response spans file operations, process management, application control, forensics, and OS-level changes.

| Action | Description |
|---|---|
| Isolate Device | Isolate a device from all network connectivity |
| Initiate Malware Scan | Start a scan on the device |
| Grab File from Device | Upload a file to a designated container |
| Submit File to Sandbox | Submit a file for sandbox analysis |
| Lock Out User | Lock a user out of the device |
| Remove User from Device | Remove a user account from the device |
| Delete Files | Delete specific files from the device |
| Kill Processes | Terminate a running process |
| Remove Application | Uninstall an application |
| Remove Browser Extension | Remove a browser extension |
| Modify Browser Settings | Set, modify, or replace browser security parameters |
| Remove Scheduled Task | Remove a cron entry or scheduled task |
| Remove Startup Items | Remove a process, agent, or file from system startup |
| Remove Library / Package | Remove a library from a development environment |
| Upgrade Application | Force an automatic update on installed software |
| Upgrade OS | Force an automatic OS update |
| Deploy Script | Deploy a script or application needed for remediation |
| Modify Registry Key | Change a value or create a new registry key |
| Disable Service | Change the status of or remove a service |
| Collect Memory Dump | Initiate and retrieve a memory dump forensically |
| Clear Browser Cache | Remove all files, cookies, and data from the browser cache |
| Remove Device from Domain | Remove a device from the domain |

Builder Mode Reference Scoring:

| Action | T | C | I | Score | Tier |
|---|---|---|---|---|---|
| Initiate Malware Scan | 3 | 1 | 1 | 5 | Explorer |
| Clear Browser Cache | 3 | 1 | 1 | 5 | Explorer |
| Grab File from Device | 3 | 1 | 1 | 5 | Explorer |
| Collect Memory Dump | 2 | 2 | 1 | 5 | Explorer |
| Submit File to Sandbox | 3 | 1 | 1 | 5 | Explorer |
| Kill Processes | 3 | 1 | 2 | 6 | Entry |
| Block File (via Hash) | 3 | 1 | 2 | 6 | Entry |
| Lock Out User | 2 | 2 | 2 | 6 | Entry |
| Remove Browser Extension | 3 | 1 | 2 | 6 | Entry |
| Remove Scheduled Task | 2 | 2 | 2 | 6 | Entry |
| Remove Startup Items | 2 | 2 | 2 | 6 | Entry |
| Disable Service | 2 | 2 | 2 | 6 | Entry |
| Delete Files | 2 | 2 | 2 | 6 | Entry |
| Modify Browser Settings | 2 | 2 | 2 | 6 | Entry |
| Remove Application | 2 | 2 | 3 | 7 | Advanced |
| Remove User from Device | 2 | 2 | 3 | 7 | Advanced |
| Remove Library / Package | 2 | 2 | 3 | 7 | Advanced |
| Modify Registry Key | 2 | 3 | 3 | 8 | Expert |
| Isolate Device | 3 | 2 | 3 | 8 | Expert |
| Remove Device from Domain | 2 | 3 | 3 | 8 | Expert |
| Upgrade Application | 2 | 3 | 3 | 8 | Expert |
| Upgrade OS | 2 | 3 | 3 | 8 | Expert |
| Deploy Script | 3 | 3 | 3 | 9 | Expert |

4.4 Cloud Response Plane

Cloud response actions target infrastructure resources, access controls, and storage in cloud environments. The blast radius of cloud actions can be particularly severe because a single misconfigured change can affect multiple dependent services.

| Action | Description |
|---|---|
| Modify Security Group Rules | Modify firewall rules on a cloud resource to restrict access |
| Create Security Group | Create a new security group and apply it to restrict traffic |
| Isolate Resource | Quarantine a cloud resource so it is unreachable |
| Modify Access Type | Switch a resource from public to private or restrict anonymous access |
| Remove Permissions to Resource | Remove a service principal or managed identity from accessing a resource |
| Delete Resource | Delete a resource from the cloud environment |
| Stop Resource | Stop a resource from execution |
| Modify KeyVault Entries | Add or modify resources in a KeyVault |
| Use Breakglass Account | Use a breakglass account in case of emergency |
| Remove Files from Storage | Remove files from a storage bucket or storage account |
| Copy Storage Device | Create a copy of a cloud storage resource for forensic investigation |
| Mount Storage Device | Mount a new storage capability to a VM for forensic investigation |
| Snapshot VM | Create a snapshot of the current state of a virtual machine |
| Enable Diagnostic Settings | Alter settings that enable advanced log gathering |
| Apply Resource Lock | Make the resource immutable or read-only |

Builder Mode Reference Scoring:

| Action | T | C | I | Score | Tier |
|---|---|---|---|---|---|
| Enable Diagnostic Settings | 3 | 1 | 1 | 5 | Explorer |
| Apply Resource Lock | 3 | 1 | 1 | 5 | Explorer |
| Snapshot VM | 3 | 1 | 1 | 5 | Explorer |
| Stop Resource | 3 | 1 | 2 | 6 | Entry |
| Modify Security Group Rules | 2 | 2 | 2 | 6 | Entry |
| Create Security Group | 2 | 2 | 2 | 6 | Entry |
| Remove Permissions to Resource | 2 | 2 | 2 | 6 | Entry |
| Copy Storage Device | 2 | 2 | 2 | 6 | Entry |
| Mount Storage Device | 2 | 2 | 2 | 6 | Entry |
| Modify Access Type | 2 | 2 | 3 | 7 | Advanced |
| Remove Files from Storage | 2 | 2 | 3 | 7 | Advanced |
| Isolate Resource | 3 | 2 | 3 | 8 | Expert |
| Modify KeyVault Entries | 2 | 3 | 3 | 8 | Expert |
| Use Breakglass Account | 2 | 3 | 3 | 8 | Expert |
| Delete Resource | 2 | 3 | 3 | 8 | Expert |

4.5 SaaS Response Plane

SaaS response actions focus primarily on email and productivity platforms, which are among the most common attack surfaces in enterprise environments. Actions in this domain directly affect end-user workflows and communications.

| Action | Description |
|---|---|
| Delete Email | Remove an email from a user's mailbox |
| Quarantine Email | Move an email to the user's quarantine or junk box |
| Create Routing Rules | Create rules to handle and route incoming email |
| Grab Email Sample | Extract an attached file from an email |
| Grab Email Link | Extract a link from inside an email message |
| Add / Remove Meeting Invite | Modify a user's calendar |
| Read / Modify User Status | Read or change a user's status in the HR platform |
| Disable Malicious Inbox Rule | Disable a rule created by a malicious actor from a user's mailbox |
| Block Sender | Block a sender from the domain |
| Modify HR Records | Modify HR records in the system beyond status |

4.6 General Options / Usability

This domain evaluates platform-level capabilities that are not tied to any specific response action but directly affect how useful, trustworthy, and manageable the AI SOC solution is in production. The scoring for this domain uses a modified scale: 0 means not available, 1 means available but limited, and 2 means fully available and functional.

This domain is split into two sub-categories to distinguish between operational platform features and AI-specific evaluation criteria.

Platform Operations

| Action | Description |
|---|---|
| Close Alerts in SIEM | The tool can close alerts in all major SIEM solutions |
| Logging | Platform logging allows identification of all actions taken |
| Reasoning Logging | Reasoning steps taken by the platform are logged at sufficient detail |
| API Development | The API is robust enough for integration with other security tools |
| Support Level | Support is responsive and allows for adequate issue resolution |
| Account Management | Account management is straightforward with SSO integration |
| Roles and Responsibility | Role-based access control is available with sufficient granularity |
| Ease of Use (GUI) | The GUI is navigable and intuitive |
| Native Chat Integration | Native integration with major communication platforms |
| Alerting | Automatic alerting when platform-level or analysis-level issues arise |
| Stats / Health Dashboards | Dashboards showing current platform status and performance |

AI-Specific Evaluation Criteria

| Action | Description |
|---|---|
| Bring Your Own Model | Ability to integrate custom models into the platform |
| Context Grounding | Ability to bring organizational data to feed into the ML model |
| Autonomous Action Thresholds | Platform allows setting confidence thresholds for autonomous execution |
| Investigation Audit Trail | Complete, exportable record of every action (AI and human) with timestamps |
| IR Metrics Tracking | Native tracking of MTTD, MTTA, MTTT, MTTI, MTTR without external tooling |
| Feedback Loop Mechanism | Analysts can confirm, reject, or correct AI decisions with feedback incorporated |
| Auto-Close Reversal Tracking | Tracks the rate at which auto-closed alerts are reopened by analysts |
| Explainability / Decision Transparency | AI provides clear, traceable reasoning for every decision |
| AI Decision Accuracy Reporting | Tracks TP accuracy, FP accuracy, and confidence scores over time |
| Model Drift Detection | Monitors AI model performance and alerts when accuracy degrades |
| Adversarial Robustness Testing | Supports or integrates with red team exercises to test AI resilience |

5. Aggregate Maturity Scoring

Evaluating each plane individually is necessary but not sufficient. Security teams making purchasing decisions and product managers tracking competitive positioning need a consolidated view that communicates the overall picture without hiding the details.

5.1 Automation Depth Score

This is the most operationally significant metric and the one that separates real autonomous solutions from products that wrapped a chatbot interface around a set of API calls.

Across all covered capabilities, calculate the distribution:

What percentage is fully automated (level 2)?

What percentage sits at Approver level (1A)?

What percentage sits at Guide level (1G)?

What percentage sits at Collaborator level (1C)?

What percentage is not available at all (0)?

A product could have 80% of capabilities covered but only 5% fully automated. That is a fundamentally different product than one with 60% covered but 40% fully automated. The first is broad but shallow. The second is narrower but operates with real autonomy where it counts.

Full Automation Rate: The percentage of total capabilities at level 2. This is the true measure of how much an AI SOC solution can operate without human intervention.

Coverage Rate: The percentage of total capabilities at any level above 0. This measures breadth regardless of automation depth.

The relationship between these two numbers tells you a great deal about how the product actually operates. A high coverage rate with a low automation rate means the product is a guided workflow tool with AI branding. A moderate coverage rate with a high automation rate relative to coverage means the product is autonomous in its areas of focus but limited in scope.
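A minimal Python sketch of the Automation Depth calculation, assuming each capability has exactly one Evaluator Mode score recorded as one of the strings `"2"`, `"1A"`, `"1G"`, `"1C"` or `"0"`. `AutomationDepth` and `automation_depth` are hypothetical names. The inputs reproduce the two products from the 80%/5% versus 60%/40% comparison above, each with 100 capabilities; how their remaining covered capabilities split across 1A and 1C is an invented detail for illustration.

```python
from collections import Counter
from dataclasses import dataclass

LEVELS = ("2", "1A", "1G", "1C", "0")


@dataclass
class AutomationDepth:
    distribution: dict[str, float]  # percent of capabilities at each level
    full_automation_rate: float     # percent at level 2
    coverage_rate: float            # percent at any level above 0


def automation_depth(levels: list[str]) -> AutomationDepth:
    """Compute the level distribution from one Evaluator Mode score per capability."""
    if not levels:
        raise ValueError("no capabilities scored")
    counts = Counter(levels)
    unknown = set(counts) - set(LEVELS)
    if unknown:
        raise ValueError(f"unknown levels: {sorted(unknown)}")
    total = len(levels)
    dist = {lvl: 100 * counts[lvl] / total for lvl in LEVELS}
    return AutomationDepth(dist, dist["2"], 100 - dist["0"])


# Hypothetical products with 100 capabilities each.
broad = automation_depth(["2"] * 5 + ["1A"] * 75 + ["0"] * 20)
deep = automation_depth(["2"] * 40 + ["1C"] * 20 + ["0"] * 40)
print(broad.coverage_rate, broad.full_automation_rate)  # 80.0 5.0
print(deep.coverage_rate, deep.full_automation_rate)    # 60.0 40.0
```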

5.2 Combined Scoring Readout

A complete ARMM evaluation for a product produces the following consolidated output:

| Metric | Value |
|---|---|
| Overall Score | 51% (equal plane weighted) |
| Composite Maturity | Entry (5 of 6 planes at Entry or above) |
| Automation Depth | 12% fully automated, 61% covered at any level |

Per-Plane Breakdown:

| Plane | Score | Coverage | Fully Automated | Tier |
|---|---|---|---|---|
| Identity | 78% | 7/9 covered | 2 actions | Advanced |
| Network | 42% | 8/13 covered | 1 action | Entry |
| Endpoint | 38% | 12/21 covered | 1 action | Entry |
| Cloud | 31% | 6/15 covered | 0 actions | Explorer |
| SaaS | 55% | 7/10 covered | 3 actions | Entry |
| General Options | 64% | 10/14 covered | 3 actions | Advanced |
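The headline Automation Depth figures can be reproduced from the per-plane rows by pooling coverage and full automation across all scored capabilities, as Section 5.1 defines them. This Python sketch does that with the example values; the `READOUT` tuple layout and the `summarize` helper are hypothetical.

```python
# (plane, covered, total, fully automated, tier) copied from the example readout.
READOUT = [
    ("Identity", 7, 9, 2, "Advanced"),
    ("Network", 8, 13, 1, "Entry"),
    ("Endpoint", 12, 21, 1, "Entry"),
    ("Cloud", 6, 15, 0, "Explorer"),
    ("SaaS", 7, 10, 3, "Entry"),
    ("General Options", 10, 14, 3, "Advanced"),
]


def summarize(
    readout: list[tuple[str, int, int, int, str]]
) -> tuple[int, int, int, int]:
    """Pool coverage and full automation across planes; count planes at Entry or above."""
    covered = sum(row[1] for row in readout)
    total = sum(row[2] for row in readout)
    automated = sum(row[3] for row in readout)
    entry_or_above = sum(1 for row in readout if row[4] != "Explorer")
    return covered, total, automated, entry_or_above


covered, total, automated, entry_or_above = summarize(READOUT)
print(f"coverage {covered}/{total} = {100 * covered / total:.0f}%")
print(f"fully automated {automated}/{total} = {100 * automated / total:.0f}%")
print(f"{entry_or_above} of {len(READOUT)} planes at Entry or above")
```

Because Automation Depth is pooled across capabilities while the Overall Score is an equal-weighted mean of plane scores, the two figures measure different things and are not expected to move together.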

6. Reading the Model

A product can reach Expert level on a specific plane by checking all the boxes for that domain. But it would be difficult to consider an AI SOC response solution Expert level overall if it lacks the ability to perform foundational actions like closing alerts in a SIEM. The tier system is designed to reward both depth within a domain and breadth across domains.

The reference maturity tables provided in Section 4 use example scores from a hypothetical mature AI SOC program with a skilled engineering team. These are illustrative, not universal benchmarks. The environmental dynamics described in Section 3.4 are not optional context; they are a core part of how the framework is intended to be used.

When comparing two products, the most informative comparison is not the aggregate score. It is the per-plane breakdown combined with the Automation Depth Score. Two products at the same composite tier can have radically different operational profiles. One may cover 80% of capabilities at the Collaborator level. The other may cover 50% but with 30% at full automation. These are different products for different buyers with different operational maturity levels.

7. Limitations and Future Work

This is version 0.1. The framework has known limitations:

The capability lists are not exhaustive. New response actions will emerge as AI SOC products mature and as attack surfaces expand.

The three-axis scoring (T, C, I) requires subjective judgment that will vary between evaluators. We plan to develop calibration guidelines to reduce inter-evaluator variance.

The framework does not currently weight domains differently. In practice, Identity response may be more important than Network response for a given organization. Weighted scoring is planned for a future version.

Detection and analysis capabilities are out of scope for this version. A separate framework or an extension to ARMM may address those in the future.

We have not included pricing, deployment time, or vendor lock-in considerations. These are important purchase factors but are outside the scope of a technical maturity model.

We are building a public web application where users can input their product's capabilities and generate ARMM scoring layers automatically, along with an exportable CSV. The application is available at: armm.secops-unpacked.ai

8. Conclusion

The AI SOC market is growing faster than the industry's ability to evaluate products on consistent terms. The ARMM framework provides a structured, repeatable methodology for measuring what an AI SOC solution can actually do in the response layer, how autonomously it can do it, and what it takes to deploy and maintain that capability in a specific operational environment.

The framework is built for two audiences: security teams evaluating products and product managers building them. For security teams, it provides a checklist and scoring system that cuts through marketing language and focuses on operational capability. For product teams, it provides a competitive analysis baseline and a prioritization framework for feature development.

SOAR gave us arms without brains. The first wave of AI SOC products gave us brains without arms. The products that will win this market are the ones that connect both. ARMM gives you a way to measure how far along that connection is, and where the gaps remain.

No current AI SOC solution will check every box. That is not the point. The point is to establish a common language and a common measurement system so that the conversation about AI SOC response capability is grounded in specifics rather than promises. Version 0.1 is the starting point. The framework will evolve as the market does.