Disclaimer: Opinions expressed are solely my own and do not reflect the views or opinions of my employer or any other affiliated entities. Any sponsored content featured on this blog is independent and does not imply endorsement by, nor relationship with, my employer or affiliated organisations.

Based on what I've been hearing from the cybersecurity community, many of you are asking which core capabilities of an AI SOC platform you should actually be looking at. A while back, I put together a blog that went through some of the high-level steps for implementation. This time, I want to deep-dive into the core capabilities as I see them.

You've probably seen my "AI SOC Shift" maps and graphics. They do a pretty good job of outlining the core pillars these platforms are targeting. It's important to see if they relate to your problems and to understand what type of platform you really need.

First, I’ll discuss the different implementation styles. Then we’ll look at data ingestion for AI SOC platforms, followed by the super important knowledge graph/DB. After that, we'll discuss investigations and, finally, the response and feedback loop.

This edition is sponsored by Crogl

Built for analysts. Powered by your data. Private design

▪️Faster investigations - Cut Mean Time to Investigate (MTTI) by over 60%.

▪️Analyst-first design - Augments, never replaces. Built for real SOC workflows.

▪️Operational anywhere - Cloud, on-prem, and air-gapped environments.

▪️Customer-managed AI - Privacy, control, and compliance built in.

▪️Knowledge Engine - Automates triage, collects evidence, and documents everything.

CISO/SOC/MSSP pros, we’d love to meet you.

Implementation Styles

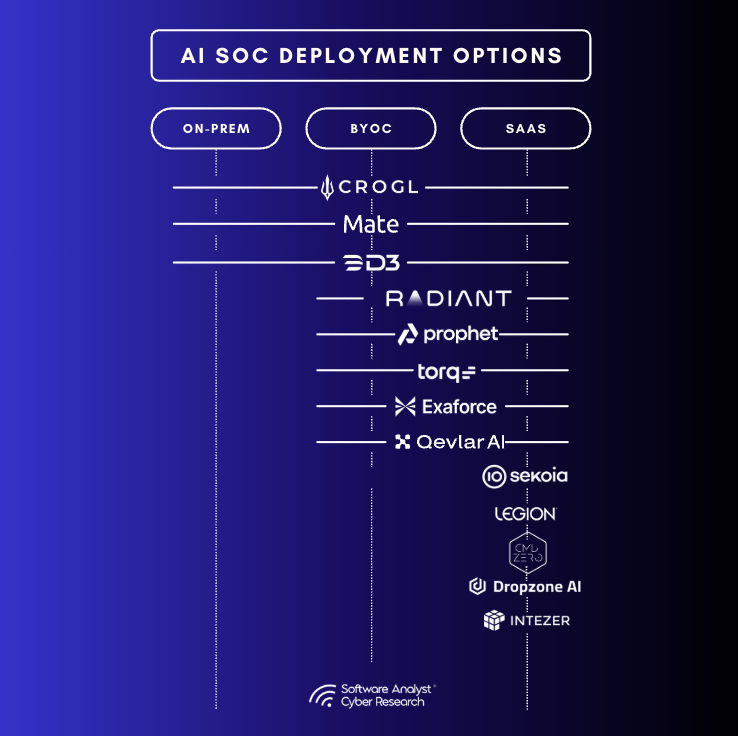

From what I’ve seen, there are a few types of implementations out there. We can start with the classic options: on-prem, Bring Your Own Cloud (BYOC), and SaaS. But what’s even more interesting is that some of these platforms now offer the option to bring your own LLM as well. There are some vendors moving into this space (check out the Vendor Spotlight section if you're interested).

I think this is going to be really relevant for large enterprises. Many of them are starting to develop their own internal LLMs and will want to use them. So, it’s a good sign that we're seeing vendors come up with capabilities that are truly enterprise-ready.

Data Ingestion

Here we have a few options as well. This is based on the vendors I’ve seen through demos, trials, or implementations: a bit over 30 in the AI SOC and next-gen security automation space.

First, you have the platforms that work just on top of your detections. These are the most common. They live on top of your detection layer, which can be a mix of SIEM, EDR, XDR, ITDR, Email Protection, and many others. What's important to consider here is which technologies they support. In my view, if the platform only ingests from detection tools and not from the SIEM, I want it to cover all of my detection tools. Otherwise, you’re left with alerts you still need to handle manually or through your SOAR process.

For the platforms that also ingest from a SIEM, you should know that not all of them support every type of detection. This means many of the custom or unique detections you’ve built in your environment might not be supported, creating a gap.
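If you want to make this check concrete during an evaluation, a quick inventory diff helps. The Python sketch below assumes you can list your detection sources and the SIEM rule types you rely on as plain strings; every name in it is a hypothetical placeholder, not any vendor's real integration list.

```python
# Hypothetical inventory of what you run today; replace with your real lists.
my_detection_sources = {"SIEM", "EDR", "XDR", "ITDR", "Email Protection"}
my_siem_rule_types = {"correlation", "threshold", "custom_query", "sigma_converted"}

# Hypothetical support matrix for the platform under evaluation.
vendor_sources = {"SIEM", "EDR", "Email Protection"}
vendor_rule_types = {"threshold", "sigma_converted"}


def coverage_gaps(have: set, supported: set) -> tuple:
    """Return (items the platform can't handle, fraction of your items it covers)."""
    gaps = have - supported
    covered = len(have & supported) / len(have) if have else 1.0
    return gaps, covered


source_gaps, source_cov = coverage_gaps(my_detection_sources, vendor_sources)
rule_gaps, rule_cov = coverage_gaps(my_siem_rule_types, vendor_rule_types)
print(f"Unsupported sources: {sorted(source_gaps)} ({source_cov:.0%} covered)")
print(f"Unsupported SIEM rule types: {sorted(rule_gaps)} ({rule_cov:.0%} covered)")
```

Anything left in a gap list is work that stays with your analysts or your SOAR process.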

Then there are others that live mainly on top of a SIEM or SOAR. This means you ingest everything you can into your SIEM, and the AI SOC platform takes any detection alert that comes out of it. The advantage here is that you can get really good coverage, as good as your SIEM allows. I've tried both implementations, and this one usually provides better results, assuming you can afford to get all your data into the SIEM.

One last thing here: some platforms can analyze your detections and come back with recommendations. Others will just ingest them and don’t put much effort into understanding why a rule runs in a specific way. For me, it's pretty important that the AI SOC platform tries to understand the logic of the rule, not just the alert it produces.

The Brains: Knowledge Graph/DB (Enrichment and Context)

This is a key component for any AI SOC solution. Think of it as the smart enrichment and knowledge automation engine that should go out and grab all the information it needs to understand an alert better.

From what I’ve seen, some platforms can connect to various tools that provide this information, from EDR, IAM, and Case Management to IaaS, Code Repos, and external threat intel feeds. Some might even give you some of that TI for free if you don’t want to bring your own.

I think this is critical for two reasons. First, to understand any alert, you need internal context. Think about whether the platform can ingest all the knowledge from your team: past incidents from Jira, wikis on Confluence or Notion, or documents on SharePoint. If you’re comfortable with it, see if they can even pull from specific IM channels where the team discusses cases.

Second, it's key to be able to connect to your internal case management solution like ServiceNow or Jira, or even GitHub repos. This allows the AI to check for relevant tickets or change requests related to the activity it's seeing.
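To make the idea of a knowledge graph a bit more tangible, here's a toy Python sketch: internal context (a change request, a past incident, a wiki page) stored as linked entities, plus a breadth-first walk that pulls everything within a couple of hops of the alert's entity. The entity IDs and edges are hypothetical, and this only illustrates the concept; it is not how any particular platform stores or queries its graph.

```python
from collections import deque

# Hypothetical edges pulled from internal sources (Jira, ServiceNow, wikis).
# The "type:id" prefixes are just an illustrative naming convention.
EDGES = [
    ("host:web-01", "user:alice"),
    ("host:web-01", "change:CHG-1042"),      # change request touching the host
    ("user:alice", "incident:SEC-77"),        # past incident involving the user
    ("incident:SEC-77", "wiki:phishing-runbook"),
    ("host:db-02", "change:CHG-2001"),
]


def build_graph(edges):
    """Build an undirected adjacency map from (a, b) pairs."""
    graph = {}
    for a, b in edges:
        graph.setdefault(a, set()).add(b)
        graph.setdefault(b, set()).add(a)
    return graph


def related_context(graph, start, max_hops=2):
    """Breadth-first search: map each node within max_hops of start to its distance."""
    seen = {start: 0}
    queue = deque([start])
    while queue:
        node = queue.popleft()
        if seen[node] == max_hops:
            continue  # don't expand beyond the hop limit
        for neighbor in graph.get(node, ()):
            if neighbor not in seen:
                seen[neighbor] = seen[node] + 1
                queue.append(neighbor)
    del seen[start]  # the alert entity itself isn't "context"
    return seen


graph = build_graph(EDGES)
context = related_context(graph, "host:web-01")
for node, hops in sorted(context.items(), key=lambda kv: (kv[1], kv[0])):
    print(hops, node)
```

In a real deployment the edges would come from connectors to your case management, wiki, and code tools, which is exactly why the breadth of integrations matters.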

So, put a lot of effort into evaluating this part. Make sure you can connect the tools you have so the platform can learn from your environment.

The Investigation Engine

This part can go into so many details that I'll probably do a follow-up blog on it. You can also check out a previous article where I walk through handling an EDR alert.

There are many different ways this is done, and many AI SOCs have their own method for stitching data together. But as far as capabilities, I’m looking for the following:

Does it use response playbooks? I want a way to define custom scenarios or import my existing playbooks into the platform. That way, the platform is aware not just of best practices but also of my internal processes. In my own testing, I tried running it without any guidance from my playbooks, and the results weren't great. The investigation quality actually got worse over time.

What's the right mix of guidance? I also tried adding my playbooks and letting the AI mainly follow them, and the results were good but a bit limited. We realized this would mean constantly updating our playbooks. The third approach, which got the best results, was to combine our internal playbooks with the platform's best practices. It understood our environment better and used all the log sources it needed to draw a conclusion. Plus, it took paths that were not in our playbooks but made sense in that specific context.

Don't fall for the speed trap. These platforms mainly run API queries or search the SIEM. This means some investigations will only be as fast as your other tech. You have API rate limits and SIEM performance limitations. (Note: if your SIEM pricing is based on the number of queries you run, an AI will go a bit wild and run many more queries than a human would).
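It helps to model those limits before a trial. Many APIs enforce rate limits with something like a token bucket, so here's a generic Python sketch of one, driven by a fake clock so the behaviour is deterministic. The rate and capacity are made-up numbers; check your own SIEM and tool API documentation for the real limits and pricing. The `granted` counter is there because, if you pay per query, that's the number you'd multiply by your per-query price.

```python
import time


class TokenBucket:
    """Generic token-bucket limiter: bursts up to `capacity` requests,
    then refills at `rate` tokens per second."""

    def __init__(self, rate: float, capacity: int, clock=time.monotonic):
        self.rate = rate
        self.capacity = capacity
        self.tokens = float(capacity)
        self.clock = clock
        self.last = clock()
        self.granted = 0  # queries actually let through

    def try_acquire(self, cost: float = 1.0) -> bool:
        now = self.clock()
        # Refill in proportion to elapsed time, never above capacity.
        self.tokens = min(self.capacity, self.tokens + (now - self.last) * self.rate)
        self.last = now
        if self.tokens >= cost:
            self.tokens -= cost
            self.granted += 1
            return True
        return False  # caller has to wait and retry: this is where investigations slow down


class FakeClock:
    """Manually advanced clock so the example is deterministic."""

    def __init__(self):
        self.t = 0.0

    def __call__(self):
        return self.t


# Hypothetical limits: 2 queries/second sustained, bursts of 5.
clock = FakeClock()
bucket = TokenBucket(rate=2.0, capacity=5, clock=clock)
burst = [bucket.try_acquire() for _ in range(7)]  # an AI firing 7 queries at once
clock.t = 1.0                                     # one second later
after_wait = [bucket.try_acquire() for _ in range(3)]
print(burst, after_wait, bucket.granted)
```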

Parallel vs. Sequential. It's also important to check if the platform runs actions in parallel or one after another. In security investigations, parallel actions don't always work because you often need to pivot based on what you find. Parallel makes sense for enrichment, but not for reactive threat hunting.
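Here's a minimal Python sketch of that distinction: independent enrichment lookups fanned out with a thread pool, versus pivots where each step depends on what the previous one found. All the lookup functions are hypothetical stubs returning canned data; in practice they would be API or SIEM calls.

```python
from concurrent.futures import ThreadPoolExecutor


# Hypothetical enrichment lookups: independent of each other, so safe to run in parallel.
def geoip(ip):
    return {"ip": ip, "country": "NL"}


def reputation(ip):
    return {"ip": ip, "score": 85}


def asset_owner(host):
    return {"host": host, "owner": "alice"}


def enrich_parallel(ip, host):
    """Submit all independent lookups at once, then collect their results."""
    with ThreadPoolExecutor(max_workers=3) as pool:
        futures = {
            "geoip": pool.submit(geoip, ip),
            "reputation": pool.submit(reputation, ip),
            "owner": pool.submit(asset_owner, host),
        }
        return {name: fut.result() for name, fut in futures.items()}


# Hypothetical pivot steps: each one needs the previous step's output.
def processes_on(host):
    return ["powershell.exe"] if host == "web-01" else []


def children_of(process):
    return ["rundll32.exe"] if process == "powershell.exe" else []


def investigate_sequential(host):
    """Pivot step by step; the trail ends wherever a step returns nothing to follow."""
    trail = [("host", host)]
    for proc in processes_on(host):
        trail.append(("process", proc))
        for child in children_of(proc):
            trail.append(("child", child))
    return trail


print(enrich_parallel("203.0.113.7", "web-01"))
print(investigate_sequential("web-01"))
```

The sequential half is the reactive hunting case: you can't ask for a process's children until you know which process to look at.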

Self-Verification. And one last point: does the AI verify and check itself once it completes an investigation? I've seen that when it does, it usually comes up with better outcomes.

The Copilot. I can't forget the AI Copilot. At first, I thought it was mainly for starting a threat hunt, but I also want it available during an investigation. You should be able to pick up where the case was escalated and ask questions about specific steps or just continue the investigation yourself. It's important to make these interactions visible so all analysts can see what others have investigated. Good auditing and sharing options are key.

Taking Action: Remediation and Response

In this area, not many platforms are going all-in, mainly because response is harder to automate and it's where you want more deterministic, predictable automation. From what I’ve seen, there are three types of capabilities:

Suggestions: They come up with response and remediation suggestions. This is the baseline I would expect. The key here is whether they understand your environment and suggest something that makes sense or just give you generic best-practice advice.

Basic Actions: These are the ones that have some simple response actions, like blocking an IP, suspending a user session, resetting a password, running an AI bot interview with a user, alerting via IM, or quarantining a machine. I like this, as in some cases that’s all you need. It’s easy and convenient.

Native Automation: This is the third type, where platforms start to offer native automation capabilities like those in a SOAR platform, where you can build your own custom response workflows.
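Whichever type you land on, the point about deterministic, predictable automation is worth making concrete. The Python sketch below is a hypothetical policy gate: fixed sets decide which actions run automatically, which wait for an analyst, and which are rejected outright. The action names and policy sets are assumptions for illustration, and the function only returns a description instead of calling any real tool.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical policy: fixed sets decide what may run without a human.
AUTO_APPROVED = {"alert_via_im", "block_ip"}
NEEDS_APPROVAL = {"suspend_session", "reset_password", "quarantine_host"}


@dataclass(frozen=True)
class Action:
    name: str
    target: str


def dispatch(action: Action, approved_by: Optional[str] = None) -> str:
    """Deterministic gate: the same action and approval always give the same outcome.
    Returns a description; a real integration would call the tool's API here."""
    if action.name in AUTO_APPROVED:
        return f"executed {action.name} on {action.target}"
    if action.name in NEEDS_APPROVAL:
        if approved_by:
            return f"executed {action.name} on {action.target} (approved by {approved_by})"
        return f"pending approval: {action.name} on {action.target}"
    # Anything outside the policy is rejected rather than improvised.
    return f"rejected unknown action: {action.name}"


print(dispatch(Action("block_ip", "203.0.113.7")))
print(dispatch(Action("quarantine_host", "web-01")))
print(dispatch(Action("quarantine_host", "web-01"), approved_by="analyst-1"))
```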

Closing the Loop: Lessons Learned

And the last part. Here we have a few capabilities to look for.

Summaries & Reports: All platforms do alert and incident summaries; it’s a core feature. But there are different types. Some allow you to create executive reports and define a template for how they should look. You don't want to present reports to your management that are in a different style every time, so consistency is important. Others can create a summary of specific alerts you select and let you add artifacts to tailor it.
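For the consistency point, the simplest mechanism is a fixed template that every report is rendered through. Here's a hedged Python sketch using the standard library's `string.Template`; the fields and metrics are hypothetical examples, not what any platform actually puts in its executive reports.

```python
from string import Template

# Hypothetical executive-summary layout, fixed once so every report looks the same.
EXEC_TEMPLATE = Template(
    "Period: $period\n"
    "Alerts triaged: $alerts\n"
    "Escalated to incidents: $incidents\n"
    "Escalation rate: $rate\n"
    "Top detection: $top_rule\n"
)


def render_exec_report(period, alerts, incidents, top_rule):
    rate = f"{incidents / alerts:.1%}" if alerts else "n/a"
    # substitute() raises KeyError if the template gains a placeholder that isn't
    # supplied, so a report can't silently go out with a blank field.
    return EXEC_TEMPLATE.substitute(
        period=period, alerts=alerts, incidents=incidents, rate=rate, top_rule=top_rule
    )


print(render_exec_report("2024-W23", 1000, 25, "Impossible travel"))
```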

The Feedback Loop: This is where things get interesting.

Some platforms give you no feedback mechanism. :)

Then you have the ones that offer basic suggestions, usually based on a summary report or dashboard.

Others come with suggestions on how to improve your detections. (This is my favorite: it’s what I want, and I’ve even started to use it.)

The ideal is a platform that gives you suggestions for both detection improvements and process improvements.
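As a rough illustration of what detection-improvement feedback can start from, the Python sketch below computes a false-positive rate per rule from analyst verdicts and flags rules worth tuning. The verdict data, threshold, and minimum case count are all hypothetical; a real platform would presumably weigh much more context than a single ratio.

```python
from collections import Counter

# Hypothetical analyst verdicts exported from closed cases: (rule_name, verdict).
VERDICTS = [
    ("Impossible travel", "false_positive"),
    ("Impossible travel", "false_positive"),
    ("Impossible travel", "true_positive"),
    ("Impossible travel", "false_positive"),
    ("Encoded PowerShell", "true_positive"),
    ("Encoded PowerShell", "true_positive"),
    ("Mass file rename", "false_positive"),
]


def tuning_candidates(verdicts, fp_threshold=0.5, min_cases=3):
    """Flag rules whose false-positive rate exceeds fp_threshold,
    skipping rules with fewer than min_cases verdicts."""
    totals = Counter(rule for rule, _ in verdicts)
    fps = Counter(rule for rule, verdict in verdicts if verdict == "false_positive")
    flagged = {}
    for rule, n in totals.items():
        rate = fps[rule] / n
        if n >= min_cases and rate > fp_threshold:
            flagged[rule] = round(rate, 2)
    return flagged


print(tuning_candidates(VERDICTS))
```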

Final Thoughts

Evaluating an AI SOC platform isn't about finding a single killer feature. It's about understanding how all these pieces (ingestion, knowledge, investigation, response, and feedback) work together. The goal isn't just to find a tool that closes alerts fast. It's to find a partner that can learn from your environment, adapt to your processes, and ultimately make your entire security program smarter. You need a system that thinks and learns with you, not just another black box.

Vendor Spotlight: Crogl

The Crogl team recently walked me through their platform, and a few things stood out that are worth calling out here.

First, Crogl is built with large enterprises in mind. You can deploy it however you want (on-prem, in your own cloud, or as a managed service), and it supports a bring-your-own LLM approach. I expect more platforms will need to support that as enterprises roll out their own internal models.

Second, Crogl takes a pragmatic view of data. Instead of forcing you to normalize everything up front, the platform builds a knowledge graph that maps fields and entities across multiple data sources (SIEM, Data Lake, EDR, S3, Log Analytics, etc.). This means analysts can pivot investigations across fragmented environments without waiting for rigid schemas to be enforced.

Third, response plans in Crogl aren’t static playbooks that break the moment something changes. They’re transparent, customizable, and designed to evolve as analysts take new actions. This gives teams both consistency and flexibility, a balance many AI SOC platforms struggle to get right.

On investigations, Crogl doesn’t chase the “speed at all costs” narrative. Instead, the focus is on depth, consistency, and repeatability. Queries run as fast as your SIEM or data lake allows, but the system is designed to take the right investigative paths, verify results, and produce outcomes you can defend.

Finally, Crogl closes the loop with feedback. Analyst decisions feed back into the system, updating response plans and strengthening the knowledge graph. Over time, this builds a living model of how your team actually investigates and responds, rather than locking you into a static black box.

In short: Crogl is positioning itself as an investigation-first AI SOC platform. It’s enterprise-ready, analyst-friendly, and clearly built around the realities of fragmented data, complex processes, and the need for governance.

🏷️ Blog Sponsorship

Want to sponsor a future edition of the Cybersecurity Automation Blog? Reach out to start the conversation. 🤝

🗓️ Request a Services Call

If you want to get on a call and have a discussion about security automation, you can book some time here

Join as a top supporter of our blog to get special access to the latest content and help keep our community going.

As an added benefit, each Ultimate Supporter will receive a link to the editable versions of the visuals used in our blog posts. This exclusive access allows you to customize and utilize these resources for your own projects and presentations.