Disclaimer: Opinions expressed are solely my own and do not reflect the views or opinions of my employer or any other affiliated entities. Any sponsored content featured on this blog is independent and does not imply endorsement by, nor relationship with, my employer or affiliated organisations.

Article written in collaboration with Andrew Green

We often argue over semantics, but your agents shouldn't. They need explicit definitions of what data and events mean for your business. Otherwise, they fall back on their training data, which often doesn't apply to your environment.

For example, a log from an on-prem Cisco router and an AWS VPC log are structured differently, but both contain IP addresses, ports, and protocols. An LLM can understand what these network elements mean and make general inferences about network requests. But how will it determine which instances of traffic between your on-prem environment and the cloud are expected and which are suspicious?

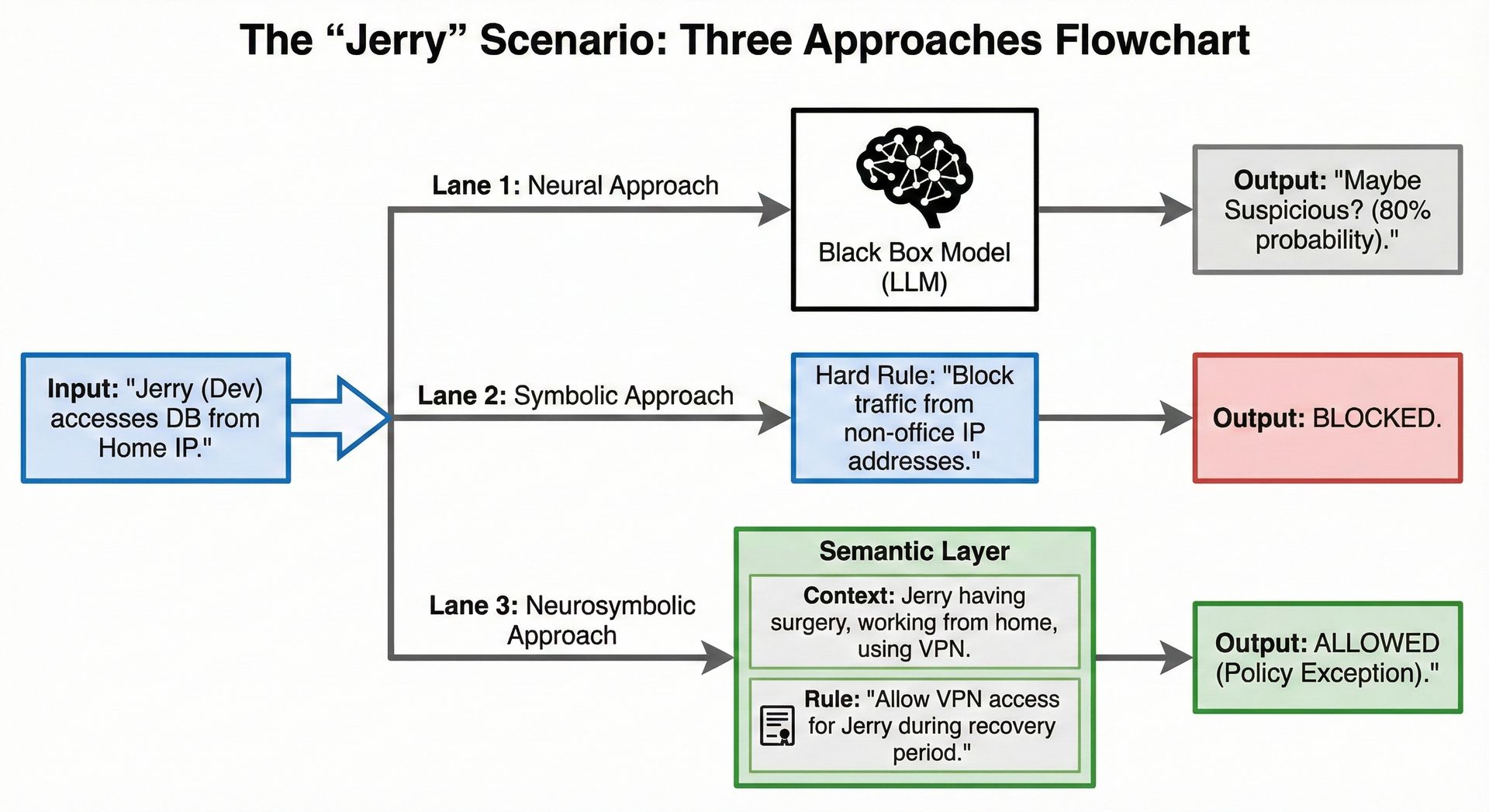

You've got three options here.

The first one is to ask the LLM, 'Hey, is this suspicious?', at which point the LLM uses its training data and prompt context to give a statistically likely interpretation. This is the neural approach.

The second one is to define an explicit rule, such as alert if request.source_ip in "10.0.0.0/8" and request.destination_ip in "52.0.0.0/8". This is the symbolic approach.
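To make the symbolic option concrete, here is a minimal Python sketch of that rule using the standard-library ipaddress module. The function name should_alert is my own, and 52.0.0.0/8 is simply the illustrative range from the rule above, not a complete list of cloud addresses.

```python
import ipaddress

# Ranges taken as-is from the example rule; illustrative only.
ON_PREM = ipaddress.ip_network("10.0.0.0/8")
CLOUD_RANGE = ipaddress.ip_network("52.0.0.0/8")


def should_alert(source_ip: str, destination_ip: str) -> bool:
    """Fire when traffic from the on-prem range heads to the cloud range."""
    src = ipaddress.ip_address(source_ip)
    dst = ipaddress.ip_address(destination_ip)
    return src in ON_PREM and dst in CLOUD_RANGE
```

should_alert("10.1.2.3", "52.95.110.1") fires and should_alert("10.1.2.3", "8.8.8.8") does not. The rule is fast, deterministic and auditable, but it only knows about IP ranges; it has no notion of who is behind the traffic or why.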

The third one is to tell the LLM: 'Hey, our dev Jerry had leg surgery. He won't leave the house while he heals, but he'll be working from home.' With the right tools, the LLM can resolve the ambiguities in this message and combine them with hard rules to produce an explicit instruction: for the next 6 months, Jerry's access requests are expected to come from his VPN, and anything else is suspicious. This is the neurosymbolic approach.

To get to this third option, we need a semantic layer.

The Fundamental Tension: Why We Need Semantic Layers

Before we define what semantic layers are, let's understand the problem they're solving. There's a fundamental tension in how we approach automated reasoning in security:

Pure Neural (LLM) Approach:

Excellent at pattern recognition and handling ambiguity

Can process natural language queries

Adaptable to new situations

BUT: Non-deterministic, expensive at scale, can't reliably explain its decisions

Pure Symbolic Approach:

Deterministic and auditable

Fast and efficient at scale

Provides clear reasoning chains

BUT: Brittle with edge cases, requires extensive rule maintenance

The semantic layer provides a foundation for hybrid approaches where symbolic reasoning handles facts and constraints while neural networks handle ambiguity and the natural-language interface. It's the bridge between "Jerry is working from home while recovering" (natural language) and "source_ip = Jerry_VPN_IP AND time_range = next_6_months" (executable logic).
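Here is one way the Jerry example could look once the neural step has done its part. This is a sketch under assumptions: the LLM call itself is not shown, the JSON it returns (identity, VPN address, validity dates) is a hypothetical schema, and 203.0.113.10 is a documentation address standing in for Jerry's VPN IP. The symbolic side validates that output into a typed rule and evaluates it deterministically.

```python
import ipaddress
import json
from dataclasses import dataclass
from datetime import date
from typing import Optional

# Hypothetical structured output from the neural step (LLM call not shown).
# The LLM has turned "for the next 6 months" into explicit dates.
LLM_OUTPUT = """
{"identity": "jerry",
 "allowed_source": "203.0.113.10/32",
 "valid_from": "2024-06-01",
 "valid_until": "2024-12-01"}
"""


@dataclass(frozen=True)
class RemoteWorkRule:
    identity: str
    allowed_source: ipaddress.IPv4Network
    valid_from: date
    valid_until: date


def compile_rule(raw: str) -> RemoteWorkRule:
    """Parse and validate the LLM's JSON into a typed, deterministic rule."""
    data = json.loads(raw)
    rule = RemoteWorkRule(
        identity=data["identity"],
        allowed_source=ipaddress.IPv4Network(data["allowed_source"]),
        valid_from=date.fromisoformat(data["valid_from"]),
        valid_until=date.fromisoformat(data["valid_until"]),
    )
    if rule.valid_until <= rule.valid_from:
        raise ValueError("validity window is empty")
    return rule


def is_suspicious(rule: RemoteWorkRule, identity: str, source_ip: str,
                  day: date) -> Optional[bool]:
    """True/False while the rule applies; None when it does not apply."""
    if identity != rule.identity or not (rule.valid_from <= day < rule.valid_until):
        return None  # out of scope: defer to other rules
    return ipaddress.ip_address(source_ip) not in rule.allowed_source
```

The LLM never makes the final call here: malformed output makes compile_rule raise instead of guessing, and every verdict traces back to one explicit rule.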

But What Is a Semantic Layer, Exactly?

A semantic layer is an LLM-queryable abstraction layer that pulls and correlates information about entities, their relationships, and the deterministic rules that govern their interactions.

It correlates raw technical data with business meaning through symbolic reasoning.

But what is symbolic reasoning?

Actually, what is a symbol?

A symbol is a notation that captures the meaning of a concept; for example, the concept of 'putting two things together' is expressed by the symbol '+'. Humans and LLMs can use these symbols to read and define rules and instructions.

Symbolic reasoning therefore manipulates these notations according to explicit rules to work out a conclusion.

At its core, a semantic layer must define the following three concepts:

Entities: identities (both human and workload), assets such as servers and applications, events like a GET request, and behaviors such as a developer (identity) making a POST request (event) to a database (asset).

Relationships: usually modeled as relationship graphs or knowledge graphs, these map facts like User X manages Asset Y, Asset Y hosts Application Z, Application Z processes Data Type W, and Data Type W is subject to Regulation V.

Symbolic Reasoning: applying logic-based rules, so you can trace exactly why a decision was made. Every step is auditable, testable, and deterministic. For example:

a. IF user.role = "contractor"

b. AND access.time NOT IN business_hours

c. AND asset.classification = "confidential"

d. THEN risk_score = HIGH
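A minimal Python sketch of these three pieces working together: entities as small dataclasses, relationships as (subject, predicate, object) triples, and the contractor rule above evaluated with a step-by-step trace. The names, the triples and the 08:00-18:00 business hours are hypothetical. The rule only defines the HIGH case, so the sketch returns None when it does not fire.

```python
from dataclasses import dataclass
from datetime import datetime, time
from typing import Optional

BUSINESS_HOURS = (time(8, 0), time(18, 0))  # hypothetical; define your own


@dataclass(frozen=True)
class User:
    name: str
    role: str


@dataclass(frozen=True)
class Asset:
    name: str
    classification: str


@dataclass(frozen=True)
class Access:
    user: User
    asset: Asset
    at: datetime


# Relationships as (subject, predicate, object) triples (hypothetical examples).
TRIPLES = {
    ("alice", "manages", "payments-db"),
    ("payments-db", "hosts", "billing-app"),
    ("billing-app", "processes", "cardholder-data"),
    ("cardholder-data", "subject_to", "PCI DSS"),
}


def follow(subjects: set, predicate: str) -> set:
    """One hop across the graph: every object linked to a subject by predicate."""
    return {o for s, p, o in TRIPLES if s in subjects and p == predicate}


def regulations_for(user: str) -> set:
    """User -> managed assets -> hosted apps -> processed data -> regulations."""
    nodes = {user}
    for predicate in ("manages", "hosts", "processes", "subject_to"):
        nodes = follow(nodes, predicate)
    return nodes


def contractor_rule(access: Access) -> tuple[Optional[str], list[str]]:
    """Evaluate rule a-d, recording whether each condition held."""
    start, end = BUSINESS_HOURS
    checks = [
        ("user.role = contractor", access.user.role == "contractor"),
        ("access.time NOT IN business_hours",
         not (start <= access.at.time() < end)),
        ("asset.classification = confidential",
         access.asset.classification == "confidential"),
    ]
    trace = [f"{name}: {result}" for name, result in checks]
    fired = all(result for _, result in checks)
    return ("HIGH" if fired else None), trace
```

Calling regulations_for("alice") walks the manages -> hosts -> processes -> subject_to chain and returns {"PCI DSS"}, while contractor_rule returns its trace alongside the verdict, which is what makes the decision auditable.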

Where Should Semantic Layers Live?

Considering that most security activities revolve around gathering accurate and relevant data, where in the modern SecOps stack should this semantic layer be inserted? This is where theory meets painful reality, and where practitioners diverge sharply on the right approach.

There are four main architectural positions, each with compelling arguments and serious tradeoffs:

Option 1: In the Data Pipeline (Pre-SIEM)

The argument here is to do semantic processing before data ever reaches your SIEM or data lake.

Advantages:

Reduces data volume through intelligent filtering

Normalizes entities at the source

Cheaper than processing in expensive SIEM platforms

Can route data based on semantic understanding

Challenges:

Requires real-time processing at massive scale

Changes to semantic models require pipeline updates

Limited context (can't look at historical data easily)

Becomes another system to maintain

Verdict: This works well for basic entity resolution and enrichment, but struggles with complex relationship modeling that requires historical context.
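A minimal sketch of pre-SIEM entity resolution and routing in Python. The VPC parser assumes the default (version 2) AWS VPC Flow Logs field order; the router record is assumed to be already parsed into hypothetical keys, since real on-prem formats vary. The common schema, the asset inventory and the routing policy (events touching critical assets go to the SIEM, everything else to cheaper storage) are illustrative assumptions.

```python
from typing import Any, Optional

# Default (version 2) AWS VPC Flow Logs fields, in order.
VPC_FIELDS = ["version", "account_id", "interface_id", "srcaddr", "dstaddr",
              "srcport", "dstport", "protocol", "packets", "bytes",
              "start", "end", "action", "log_status"]
IANA_PROTOCOLS = {"1": "icmp", "6": "tcp", "17": "udp"}

# Hypothetical asset inventory used for enrichment.
ASSETS = {
    "10.0.4.12": {"asset": "build-server", "critical": False},
    "172.31.16.21": {"asset": "payments-db", "critical": True},
}


def from_vpc_flow(line: str) -> Optional[dict[str, Any]]:
    rec = dict(zip(VPC_FIELDS, line.split()))
    if rec.get("log_status") != "OK":
        return None  # NODATA/SKIPDATA records use "-" instead of addresses/ports
    return {"src_ip": rec["srcaddr"], "dst_ip": rec["dstaddr"],
            "src_port": int(rec["srcport"]), "dst_port": int(rec["dstport"]),
            "protocol": IANA_PROTOCOLS.get(rec["protocol"], rec["protocol"]),
            "origin": "aws_vpc"}


def from_router(rec: dict[str, Any]) -> dict[str, Any]:
    # Assumes an upstream parser already produced these (hypothetical) keys.
    return {"src_ip": rec["src"], "dst_ip": rec["dst"],
            "src_port": int(rec["sport"]), "dst_port": int(rec["dport"]),
            "protocol": rec["proto"].lower(), "origin": "onprem_router"}


def enrich_and_route(event: dict[str, Any]) -> tuple[str, dict[str, Any]]:
    """Attach asset names, then pick a destination based on asset criticality."""
    src = ASSETS.get(event["src_ip"], {})
    dst = ASSETS.get(event["dst_ip"], {})
    enriched = {**event, "src_asset": src.get("asset"), "dst_asset": dst.get("asset")}
    critical = src.get("critical", False) or dst.get("critical", False)
    return ("siem" if critical else "data_lake"), enriched
```

Both sources now share the same field names, which is the "normalizes entities at the source" advantage. The limitation is just as visible: each event is judged in isolation, with no access to history.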

Option 2: Within the SIEM Platform

Modern SIEMs increasingly claim to embed semantic capabilities directly.

Advantages:

Integrated with detection and investigation workflows

Access to all historical data for context

Single platform to manage

Vendor support (in theory)

Challenges:

Vendor lock-in to proprietary semantic models

Performance impacts on already-stressed SIEMs

Limited flexibility in modeling

Expensive processing at SIEM rates

Verdict: Most SIEM vendors are adding "semantic" features, but they're often just rebranded lookup tables and data models. True semantic reasoning with relationship graphs and symbolic logic remains limited. XDR vendors have marketed this capability more aggressively, positioning themselves as solving the "semantic gap" problem, but implementation depth varies significantly.

Option 3: As a Separate Analytics Layer

Building a semantic layer that operates alongside or on top of your security data infrastructure.

Advantages:

Flexibility to model your specific environment

Can work across multiple data sources

Optimized for complex reasoning

Natural fit for AI/ML integration

Challenges:

Another platform to integrate and maintain

Potential latency for real-time use cases

Requires data federation or complex APIs

Organizational boundaries (who owns it?)

Verdict: This is where most successful implementations end up, but it requires significant investment and organizational commitment. Some SOAR platforms have evolved toward this model, building semantic layers with automation workflows and embedding business context in case management. AI SOC platforms are also exploring this space, though many are still closer to simple enrichment than to true semantic reasoning.

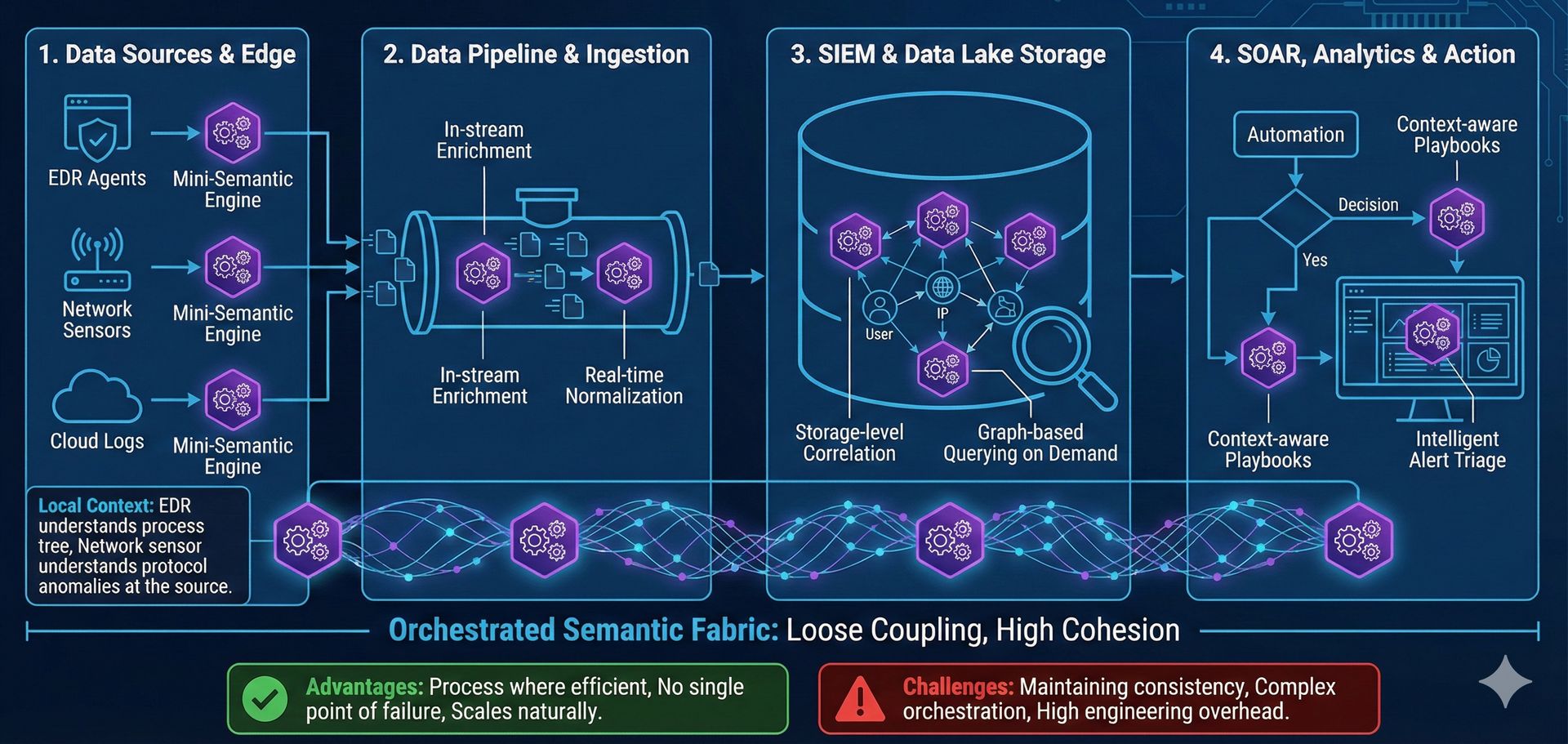

Option 4: Distributed Semantic Processing

The most ambitious approach: semantic capabilities distributed throughout your stack, with processing happening wherever it makes most sense.

Advantages:

Process data where it makes most sense

No single point of failure

Can optimize for different use cases

Scales naturally with infrastructure

Challenges:

Consistency across distributed models

Complex orchestration and governance

Difficult to maintain and update

Requires sophisticated engineering

Verdict: This is the "microservices of semantic layers": great in theory, nightmarish in practice for most organizations. You need mature DevOps practices and significant engineering resources to make this work.

How Semantic Layer Projects Fail

Let's be honest about the failure modes, because understanding these is more valuable than any architecture diagram:

1. Trying to Model Everything

Organizations attempt to create comprehensive ontologies of their entire environment. This is impossibly complex and never finishes. You don't need a complete knowledge graph of your infrastructure to get value from semantic layers. But you do need explicit information about what is modeled and what is not.
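One lightweight way to make coverage explicit, sketched in Python: answer with a third value when something isn't modeled, instead of silently returning "no". The asset names and the is_critical function are hypothetical.

```python
from enum import Enum


class Answer(Enum):
    YES = "yes"
    NO = "no"
    NOT_MODELED = "not_modeled"


# Hypothetical: what the semantic layer actually covers, and what it knows.
MODELED_ASSETS = {"payments-db", "build-server", "hr-portal"}
CRITICAL_ASSETS = {"payments-db"}


def is_critical(asset: str) -> Answer:
    if asset not in MODELED_ASSETS:
        # Say so explicitly, so an agent does not fill the gap from training data.
        return Answer.NOT_MODELED
    return Answer.YES if asset in CRITICAL_ASSETS else Answer.NO
```

An agent that receives NOT_MODELED knows to escalate or ask, rather than reaching for a plausible-sounding answer.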

2. Ignoring Organizational Reality

Semantic models require agreement on basic concepts like "what is a critical asset?" Most organizations can't even agree on this informally, much less formally model it. The technical challenge is often easier than the political one.

3. Underestimating Maintenance

Semantic models aren't write-once. They require constant updates as your environment evolves. Without dedicated resources, they become stale and useless within months.

4. Over-Engineering the Solution

Building elaborate graph databases and reasoning engines when simple lookup tables would suffice for 80% of use cases. Start with the minimum viable semantic layer that solves your specific problems.

5. Lacking Clear Use Cases

Implementing semantic layers because they sound advanced, not because they solve specific problems. "We need better context" isn't a use case; "We need to automatically identify which database access is anomalous based on team structure and data classification" is.
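That use case can start life as little more than three lookup tables. A hypothetical Python sketch, with invented users, teams, databases and classifications:

```python
# Hypothetical lookup tables: a minimum viable semantic layer for one use case.
TEAM_OF = {"alice": "payments", "bob": "marketing", "carol": "data-platform"}
DB_CLASSIFICATION = {"payments-db": "pci", "crm-db": "internal", "hr-db": "pii"}

# Which data classifications each team is expected to access.
TEAM_ALLOWED = {
    "payments": {"pci", "internal"},
    "marketing": {"internal"},
    "data-platform": {"internal", "pii", "pci"},
}


def assess_db_access(user: str, database: str) -> tuple[str, str]:
    """Return a verdict plus the reason behind it."""
    team = TEAM_OF.get(user)
    classification = DB_CLASSIFICATION.get(database)
    if team is None or classification is None:
        return "not_modeled", f"no mapping for user={user!r} or database={database!r}"
    if classification in TEAM_ALLOWED.get(team, set()):
        return "expected", f"{team} team is expected to access {classification} data"
    return "anomalous", f"{team} team has no documented need for {classification} data"
```

For example, assess_db_access("bob", "payments-db") returns ("anomalous", "marketing team has no documented need for pci data"). If lookups like these stop being enough, that is the signal to invest in a graph.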

The Practical Path Forward

If you're considering implementing semantic capabilities, here's what actually works:

Start Small and Specific

Pick one use case with clear business value. User-to-asset relationship modeling for access analysis. Application dependency mapping for incident scope. Data classification for DLP prioritization. Prove value before expanding.
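The incident-scope case can be sketched as a breadth-first walk over a dependency map. In this hypothetical Python example, each component maps to the components that depend on it, so walking outward from a compromised component lists everything potentially affected, nearest first.

```python
from collections import deque

# Hypothetical dependency map: component -> components that depend on it.
DEPENDENTS = {
    "payments-db": ["billing-api"],
    "billing-api": ["checkout-web", "invoicing-job"],
    "checkout-web": [],
    "invoicing-job": ["finance-reports"],
}


def incident_scope(compromised: str) -> list[str]:
    """Breadth-first walk of everything downstream of a compromised component."""
    seen = {compromised}
    affected = []
    queue = deque([compromised])
    while queue:
        node = queue.popleft()
        for dependent in DEPENDENTS.get(node, []):
            if dependent not in seen:
                seen.add(dependent)
                affected.append(dependent)
                queue.append(dependent)
    return affected
```

incident_scope("payments-db") returns ["billing-api", "checkout-web", "invoicing-job", "finance-reports"]; the seen set keeps cyclic dependencies from looping forever.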

Embrace Imperfect Models

Your semantic layer doesn't need to be complete or perfect. Even modeling 60% of your critical relationships provides massive value over having none. Accept that edge cases will exist.

Build for Maintenance

Plan for model updates from day one. Who owns entity definitions? How do relationships get updated? What's the approval process for changes? The technical implementation is secondary to the governance model.

Leverage Standards Where Possible

Don't reinvent entity definitions that already exist. Frameworks like OCSF provide common semantic models that reduce custom development. Think of these as starting points, not constraints.

Consider Buy vs Build Carefully

Building custom semantic layers requires significant engineering investment. Many organizations would be better served by vendor solutions, even with their limitations. Be honest about your capabilities and resources.

Further reading:

https://www.holistics.io/books/setup-analytics/data-modeling-layer-and-concepts/

Speculation on the Future of Semantic Layers in Security

Looking ahead, semantic layers will evolve in three key directions:

Standardization

Common semantic models will reduce the need for custom development. We'll share entity definitions and relationship structures like we share threat intelligence today. OCSF and similar frameworks are laying this groundwork.

Automation

ML will help maintain semantic models by learning from patterns and proposing updates. Instead of manually defining every relationship, you'll validate what the system discovers. Humans will shift from creation to curation.

Distributed Intelligence

Rather than centralizing all semantic reasoning in one layer, we'll see semantic capabilities embedded throughout security infrastructure. Your EDR will understand user context, your SIEM will reason about asset relationships, your SOAR will make decisions based on business impact. The semantic layer becomes infrastructure, not a product.

The organizations that succeed won't be those with the most sophisticated semantic models, but those that solve specific problems with appropriately scoped semantic reasoning. Start with Jerry and his VPN, not with a complete ontology of your enterprise.
